The Evolving Data Architecture: Why Modern Enterprises Are Embracing the Lakehouse Paradigm

Modern enterprises are moving beyond outdated data systems. Learn how the lakehouse paradigm unifies analytics, reduces cost, and powers real-time decision-making—backed by real results and guidance from v4c.

Remember when managing data meant a few databases your IT team could handle without breaking a sweat? Modern businesses are no longer dealing with manageable datasets. They’re flooded with information from every direction, making it essential to streamline storage, manage data efficiently, and extract actionable insights.

At v4c, we have observed firsthand how organizations are rethinking their data architecture to remain competitive, and the shift toward the "lakehouse paradigm" is central to this evolution.

The Evolution of Enterprise Data Architecture

Traditional databases were built to handle structured, transactional data. They worked well when the data was limited and predictable. But as data volumes increased, these systems showed their limitations; they weren't built for today's diverse data ecosystems.

Data warehouses felt revolutionary when they arrived, allowing us to gather information from various sources for analysis. But they came with their own set of problems: they were expensive to scale and frustratingly rigid when incorporating new data types.

The big data era brought us data lakes, promising to store massive amounts of raw data in its native format. "Just dump everything in one place and figure it out later!" But without proper governance, these lakes quickly became "data swamps": places where information went to hide rather than provide insights.

The Lakehouse: Where All Your Data Lives Together

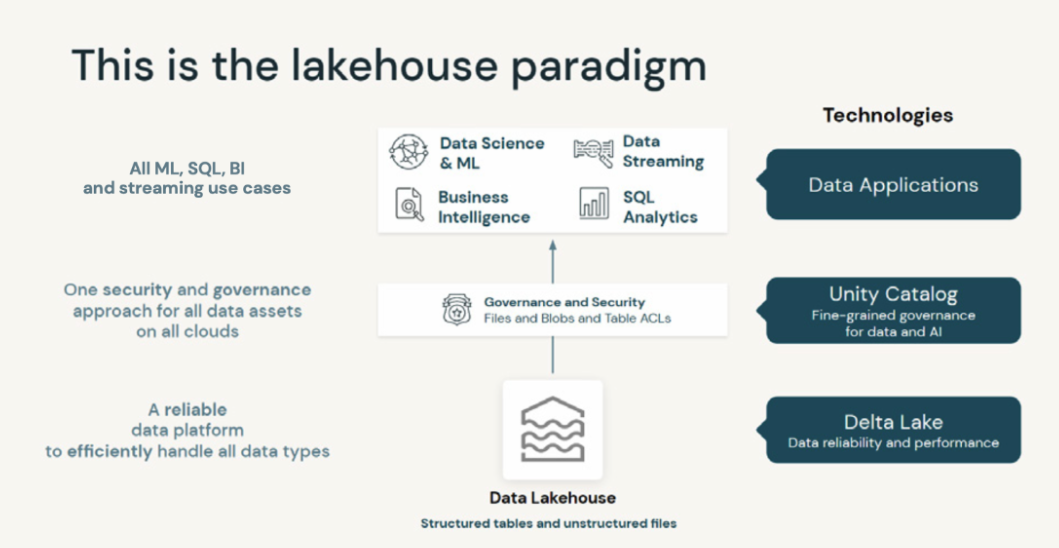

The data lakehouse represents a significant evolution in enterprise data architecture, seamlessly integrating the strengths of both data lakes and data warehouses to deliver superior business outcomes:

- Low-cost, flexible storage of a data lake

- The management, structure, and reliability of a data warehouse

- Direct access to raw data for everything from SQL queries to machine learning

- Performance that works for both traditional analytics and real-time applications

Think of the lakehouse as a strategic renovation: it preserves the proven strengths of legacy systems while introducing the modern capabilities required to meet today’s dynamic data demands.

Why Your Organization Should Care

Here's why companies are embracing this approach:

- Everything Under One Roof

Data scattered across different systems creates real inefficiencies: customer information sits in one database, transactions in another, and website logs in yet another. We worked with a financial technology services company whose analysts spent 70% of their time just moving data between systems!

With a lakehouse, all your data lives together. Your marketing team and data scientists work from the same foundation, ensuring everyone sees the same version of the truth.

- Your Budget Will Thank You

Traditional data warehouses often lock you into expensive systems where storage and computing power are bundled together. One healthcare technology client saw costs spiral as their data grew, paying premium rates even for rarely accessed historical records.

The lakehouse approach changes this equation dramatically. By storing data on low-cost cloud storage and only using computing resources when needed, you could cut infrastructure costs by 40-70%. That's money you can reinvest in actually using your data rather than just maintaining it.
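To make the mechanism concrete, here is a back-of-the-envelope sketch that compares a bundled pricing model with a decoupled one. Every price and usage figure below is a made-up placeholder chosen for easy arithmetic, not a vendor rate or a client result, and the function names are hypothetical. Actual savings depend entirely on your own data volumes and usage patterns.

```python
def bundled_monthly_cost(data_tb: float, price_per_tb: float) -> float:
    """Bundled model: one rate per terabyte covers storage plus always-on compute."""
    return data_tb * price_per_tb


def decoupled_monthly_cost(
    data_tb: float,
    storage_price_per_tb: float,
    compute_hours: float,
    compute_price_per_hour: float,
) -> float:
    """Decoupled model: cheap storage per terabyte, compute billed only for hours used."""
    return data_tb * storage_price_per_tb + compute_hours * compute_price_per_hour


# Hypothetical inputs for illustration only.
data_tb = 100.0
bundled = bundled_monthly_cost(data_tb, price_per_tb=100.0)
decoupled = decoupled_monthly_cost(
    data_tb,
    storage_price_per_tb=25.0,
    compute_hours=200.0,
    compute_price_per_hour=12.5,
)
savings = 1 - decoupled / bundled

print(f"bundled: {bundled:.0f}, decoupled: {decoupled:.0f}, savings: {savings:.0%}")
# prints "bundled: 10000, decoupled: 5000, savings: 50%"
```

The useful part is the shape of the two formulas: in the decoupled model, rarely accessed historical data only adds to the storage term, while the compute term grows with how much you actually query.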

- Supporting All Your Data Needs

Modern organizations have diverse data requirements:

- Finance needs reliable reports

- Marketing wants interactive dashboards

- Data scientists need experimental environments

- ML engineers build and deploy models

- Operations teams need real-time insights

Before, you might have needed separate systems for each use case. A well-designed lakehouse supports all these workloads without duplication or complex integration.

- Keeping Your Data Clean and Compliant

The lakehouse addresses governance challenges by bringing warehouse-style management to lake storage:

- Enforce consistent data formats with schemas

- Implement validation rules for data quality

- Track changes with transaction logs

- Maintain compliance with access controls and audit trails

These capabilities are essential in today's regulatory environment.
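The first three items in that list can be sketched in a few lines of plain Python. This is a toy model, not how Delta Lake, Iceberg, or Hudi implement these features: the `SCHEMA`, the non-negative balance rule, and the `Table` class are all hypothetical names invented for illustration.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical schema: column name -> expected Python type.
SCHEMA = {"customer_id": int, "email": str, "balance": float}


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is accepted."""
    if set(record) != set(SCHEMA):
        return [f"columns {sorted(record)} do not match schema {sorted(SCHEMA)}"]
    errors = [
        f"{column}: expected {expected.__name__}"
        for column, expected in SCHEMA.items()
        if not isinstance(record[column], expected)
    ]
    # Example data-quality rule (an assumption for this sketch).
    if not errors and record["balance"] < 0:
        errors.append("balance: must be non-negative")
    return errors


@dataclass
class Table:
    rows: list = field(default_factory=list)
    log: list = field(default_factory=list)  # append-only log, one JSON entry per attempt

    def append(self, record: dict, user: str) -> bool:
        """Validate the record, log the attempt, and store the record if it passed."""
        errors = validate(record)
        entry = {
            "version": len(self.log),
            "user": user,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "operation": "rejected" if errors else "append",
            "errors": errors,
        }
        self.log.append(json.dumps(entry))
        if errors:
            return False
        self.rows.append(record)
        return True


table = Table()
ok = table.append({"customer_id": 1, "email": "a@example.com", "balance": 10.0}, user="etl_job")
bad = table.append({"customer_id": "2", "email": "b@example.com", "balance": 5.0}, user="etl_job")
print(ok, bad, len(table.rows), len(table.log))  # prints "True False 1 2"
```

The second write is rejected because `customer_id` is a string, yet the attempt still lands in the log. That is the idea behind an audit trail: the history of what was tried and by whom is kept separately from the data itself.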

- Freedom From Vendor Lock-in

Many lakehouse implementations use open standards and formats, offering strategic advantages. With formats like Apache Parquet and table formats like Delta Lake, Iceberg, or Hudi, you maintain complete control over your data assets. Your teams can use their preferred tools and you can adapt as technology evolves without painful migrations.

Making It Real: Implementation Considerations

Transitioning to a lakehouse isn't something you do overnight. Here are practical considerations:

- Take It Step By Step

Unless you're starting fresh, adopt a hybrid approach initially. We advised one of our clients to begin by moving customer analytics to a lakehouse while keeping financial reporting in their existing warehouse. A phased approach like this lets a team gain experience before expanding.

- Invest in Your People

The lakehouse requires a blend of warehousing and big data skills. Budget for training and consider bringing in expertise to accelerate learning.

- Don't Neglect Performance Tuning

Achieving warehouse-like performance requires thoughtful design. How you partition data, organize files and optimize queries makes the difference between sub-second responses and frustrating delays.
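Partitioning is the easiest of these to illustrate. The sketch below groups rows under Hive-style partition names (such as `event_date=2024-01-01`) and shows how a query that filters on the partition column can skip every other partition. The sample events and function names are hypothetical, and in-memory lists stand in for files on cloud storage.

```python
from collections import defaultdict

# Hypothetical sample events.
EVENTS = [
    {"event_date": "2024-01-01", "amount": 10},
    {"event_date": "2024-01-01", "amount": 20},
    {"event_date": "2024-01-02", "amount": 30},
    {"event_date": "2024-01-03", "amount": 40},
]


def partition_by(rows, key):
    """Group rows under Hive-style partition names such as 'event_date=2024-01-01'."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[f"{key}={row[key]}"].append(row)
    return dict(partitions)


def query_total(partitions, key, wanted):
    """Sum 'amount' for one partition value and report how many rows were scanned."""
    scanned = 0
    total = 0
    for name, rows in partitions.items():
        if name != f"{key}={wanted}":
            continue  # pruned: this partition is skipped without reading its rows
        scanned += len(rows)
        total += sum(row["amount"] for row in rows)
    return total, scanned


partitions = partition_by(EVENTS, "event_date")
total, scanned = query_total(partitions, "event_date", "2024-01-01")
print(total, scanned, len(EVENTS))  # prints "30 2 4"
```

Only two of the four rows are read. The same choice cuts both ways: partitioning on a column your queries rarely filter on gives no pruning at all, which is why the layout has to follow real query patterns.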

- Build Governance From Day One

Without clear governance policies, you risk recreating the "data swamp" problem. Start with basic principles around data quality, access, and lifecycle management.

Real-World Transformation: One of Our FinTech Clients Finds a Better Way

A fintech company approached us with a common challenge: painfully slow data processing.

Their pipeline, built in Python, had become a bottleneck, taking more than 24 hours to deliver insights from the previous day.

This delay was actively hurting their business:

"We're basically flying blind," their lead analyst explained. "By the time we get the data, the market has already moved on."

Their engineers added: "We spend entire days waiting for the pipeline to finish before we can validate changes."

We found several issues that a lakehouse architecture could address:

- Their Python code wasn't utilizing parallel processing

- They repeatedly loaded and transformed the same data

- Storage and compute resources were tightly coupled

Working closely with their team, we implemented a lakehouse using Delta Lake:

- Rewrote processing logic using Apache Spark for parallel execution

- Implemented checkpointing so intermediate results could be reused

- Converted data to Parquet format with optimized partitioning

- Added comprehensive metadata for lineage tracking and governance
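The first two steps in that list, parallel execution and reusable intermediate results, can be sketched with the Python standard library alone. This is not the client's pipeline and it does not use Spark: a thread pool stands in for Spark's distributed executors, JSON files in a temporary directory stand in for checkpoints, and every name here (`transform`, `CHECKPOINT_DIR`, `run_pipeline`) is hypothetical.

```python
import hashlib
import json
import tempfile
import threading
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

CHECKPOINT_DIR = Path(tempfile.mkdtemp())  # hypothetical checkpoint location
calls = {"transform": 0}
calls_lock = threading.Lock()


def transform(partition):
    """Stand-in for an expensive transformation of one data partition."""
    with calls_lock:
        calls["transform"] += 1
    return {"rows": len(partition), "total": sum(partition)}


def transform_with_checkpoint(partition):
    """Reuse a saved result when this exact partition was already processed."""
    key = hashlib.sha256(json.dumps(partition).encode()).hexdigest()
    path = CHECKPOINT_DIR / f"{key}.json"
    if path.exists():
        return json.loads(path.read_text())
    result = transform(partition)
    path.write_text(json.dumps(result))
    return result


def run_pipeline(partitions):
    """Process partitions concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(transform_with_checkpoint, partitions))


partitions = [[1, 2, 3], [4, 5], [6]]
first = run_pipeline(partitions)
second = run_pipeline(partitions)  # served entirely from checkpoints
print(first == second, calls["transform"])  # prints "True 3"
```

The second run returns the same results without calling `transform` again, which is the behavior that lets engineers validate a change without waiting for the whole pipeline to recompute.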

The results were transformative. The 24-hour process now runs in just 45 minutes. Analysts have near real-time data, infrastructure costs have dropped by 60%, and development cycles have accelerated dramatically.

Beyond performance improvements, the lakehouse opened new possibilities. They implemented real-time risk scoring and fraud detection that wasn't possible before. Business teams can self-serve many analytics needs without engineering support.

One of the data scientists summed it up: "I used to spend 80% of my time wrangling data and 20% finding insights. Now those numbers are reversed."

How v4c Can Guide Your Lakehouse Journey

If the above story sounds appealing, partnering with specialists like v4c can make all the difference. As an AI/ML consulting firm, v4c brings both technical expertise and business understanding to make your lakehouse implementation successful.

- Assessing Where You Are and Where You Need to Go

We start by understanding your current data landscape and business objectives. Each organization's journey is unique, and we create a tailored roadmap based on your specific situation.

- Choosing the Right Tools for Your Needs

The lakehouse ecosystem offers multiple implementation paths. We help you choose the right path based on your requirements, existing investments, and team capabilities.

For some clients, a solution based on open-source components makes sense. For others, a managed offering provides the right balance of capability and ease of implementation. We're technology-agnostic, focused on what will work best for your situation.

- Bringing Your Lakehouse to Life

Our specialists work alongside your team to implement the solution, focusing on knowledge transfer throughout.

- Unlocking Advanced Analytics and AI

Beyond building the data foundation, we help leverage it for advanced analytics and machine learning:

- Training models directly on lakehouse data

- Building feature stores for efficient development

- Implementing real-time scoring for operational decisions

- Applying MLOps practices to manage the AI lifecycle

- Building Your Team's Capabilities

Technology is only as good as the people using it. We emphasize upskilling your team through hands-on training, design pattern workshops, and documentation of best practices specific to your implementation.

- Evolving Your Architecture as Needs Change

The lakehouse journey doesn't end with initial implementation. As needs evolve, we provide ongoing support through architecture reviews, guidance on new use cases, and help adopting emerging capabilities.

Looking to the Future

Several trends are shaping the evolution of the lakehouse paradigm:

- AI Working Behind the Scenes

Modern data systems are starting to optimize themselves using AI, automatically adjusting storage layouts, tuning caching strategies, and managing resource allocation based on actual usage. These early implementations of AI-driven optimization are already in place and gaining momentum.

- Bridging History and Now

Next-generation lakehouses will make the boundary between historical and real-time analysis nearly invisible, enabling applications that blend historical context with in-the-moment insights.

- Solutions Tailored to Your Industry

We're seeing more domain-specific solutions built on lakehouse foundations – from retail analytics packages to healthcare data platforms that incorporate industry best practices.

- From Cloud to Edge and Back

Tomorrow's architectures will extend the lakehouse concept to create a continuous fabric from edge to cloud, allowing consistent data management regardless of where data lives or is processed.

It's About Your Business, Not Just Your Data

This evolution enables your business to operate as a truly data-driven organization, aligning people, processes, and technology around reliable, accessible data.

The lakehouse pays off when marketing and data science use the same customer data, analysts work with real-time insights, and developers deploy ML models in days, not months.

The FinTech case study illustrates this perfectly. Their transformed architecture fundamentally changed how they operated. Decisions that took days now happen in near real-time. Models that took weeks to deploy now go live in hours. Most importantly, their people spend time on high-value work rather than wrangling data.

Whether you're beginning to explore modern data architecture or building on existing investments, the lakehouse offers a practical path forward. With support from v4c specialists, you can reduce risk and reach value faster.

Better business outcomes start with better data. The lakehouse gives you the foundation to move faster, work smarter, and deliver real results.

